M Baas

I am a machine learning researcher at Camb.AI. I post about deep learning, electronics, and other things I find interesting.

Speculation: when will we have actual human-level AI?

by Matthew Baas

My particularly uninformed opinion and speculation on when and if we might get to use actual human-level AI.

TL;DR: things are moving very fast in the machine learning field, particularly with language models (LMs) and the field of natural language processing (NLP). If the trend continues, the question arises: when will (if at all) me and you, the reader, will be able to use actual human level AI. This post is my speculation and uninformed opinion on when this might be, and what some possible hurdles are. It does not assume significant technical knowledge from the reader, just a cursory familiarity with what current LM’s are and how they roughly operate.

Concepts

Let’s look at our question:

When will we have actual human-level AI?

There are 3 concepts that we need to clarify before we can answer. The first one is easy: By “when” I mean a year, or span of a couple of years, wherein we could expect [the predicate]. The second two key parts require more explanation:

What do I mean by ‘actual human-level AI’?

By actual human-level AI I mean a machine learning model that one can interact with at the level of an average human through software. I.e. I’m excluding robots or physical embodiments of software models, and purely focusing on sensory input & output through software (e.g. text/vision/audio).

To clarify some more, here are key things I do not mean when I say human-level AIi in this post:

- superintelligence or even anything better than the e.g. 60th percentile of humans on most tasks.

- physical robots that look physically indistinguishable from humans

- models which are conscious; I am purely looking at outward performance when people interact with the model, not at the model’s internal representation – it can be a complete black box so long as it is not secretly manually controlled by a human.

Key things I do mean when I say human-level AI:

- you’d consider rather employing the model as your personal assistant than a regular entry-level employee (for digital tasks).

- it can handle freeform tasks: e.g. if you ask it to send send an email it can do it, or if you ask it to act in a particular way on certain days then it remembers this information. A possible test for this will be if AI can - on its own - negotiate a sale of a product with a human from start to finish.

- it is as robust as an average human: e.g. if you ask it to write a story containing some characters, and then ask it in a month’s time to continue the next chapter of the story, it retains the nature of the story and characters in a coherent and logical way.

What do I mean by ‘we have’?

By ‘we have’ I mean that we – myself and you, dear reader – can feasibly use such a model without big restrictions. In other words, there should not be large swathes of the AI’s capability that regular people are not allowed to use. My reasoning is simple: it doesn’t make sense to even talk about when we can use a human-level AI if the version which we’re restricted to use is lobotomized to be below human-level capability.

To give a better indication for what constitutes ‘big restrictions’, here are a few things which definitely are lobotomizing restrictions on regular users:

- Restrictions on the ability to make speculations or reason about conditional hypotheticals.

- Restrictions on (legal) controversial topics (e.g. government policies, medical conditions, history, …)

- Restrictions on the characteristics of people who can use the AI (e.g. preventing people of a certain belief system, or people cancelled on social media from using the the AI) that cut out a large portion of the population, excluding those who it is illegal to serve (e.g. designated terrorist organizations).

Examples which I do not consider ‘big restrictions’ are:

- Paying a reasonable price for the use of the AI. Sure it would be best if it is open-source and free, but if it costs a small fare then it is not a big hurdle and we can still answer our question in the affirmative.

- Restrictions on illegal content (the person or entity hosting/running the AI would be arrested if this was allowed).

- Having your interaction data with the AI recorded. Again, this is not nice and should by default not be allowed/enabled in my opinion, but just having your conversation recorded doesn’t make the AI less competent – at most it will just make you and the AI self-censor.

For all of these, the reasoning is simple: “humans have the ability to talk about X, if the AI we have access to cannot talk about X then it is not at human-level”. E.g. humans will get arrested if they engage in illegal activity, but can continue indefinitely talking about politics or giving amateur advice on medical conditions.

Scale

So now that you understand what I mean by the main question, let’s get to the main trend I’d like to speculate from: scaling laws for language models, and questionable comparisons to the size of the human brain. Here I will make substantial approximations in line with the engineering method. My goal is not to arrive at the perfect answer, but to give a concrete date to our question using assumptions based on the information I currently know about this uncertain and rapidly changing field. Specifically, there are 2 key assumptions I am going to make:

1. Capability as number of paramters

Approximation: In terms of capability, the amount of parameters in an artificial neural network roughly correlates with the amount of synapses in the human brain.

This rough correlation follows from the rough intuitive inspiration of artifical neural networks from actual neural networks. It is very rough, approximate, and not always true to reality – but the correlation holds: typically organisms with more synapses have greater capabilities than those with fewer synapses. There will be counter-examples (e.g. certain whales), but very roughly I think it holds on average.

2. Increasing model sizes as time progresses

Approximation: The size of machine learning models will continue to increase at a roughly similar pace to what we have seen in the past decade.

Numerical approximations

Given the two assumptions above, we can now gather some numbers and start to see a trend. First: the human brain has approximately 100-1000 trillion synaptic connections. Some sources for this are here and here. Given these estimates, I will make the rough approximation that an average human has 400 trillion (400T) synapses.

Aside 1: Why not use number of neurons as an estimate? Because we know this cannot be accurate to within even a factor of 100, since the human brain has about 100B neurons and we have neural networks with much more than that in terms of parameter count, but which are still much worse than an average human.

Aside 2: Synapses vs synaptic connections – in this post I use them interchangably, since the estimates are within a factor of 10. Estimates for the number of synaptic connections sit at 100T, and for synapses in total sit at 1000T. Both of these are fairly crude estimates already, and the connection between counts of biological structures and counts of model paramters is also fairly approximate, so taking an approximation of 400T synapses/synaptic connections for the purposes of comparison to parameter count is, I think, sufficient.

Next, let’s look at the biggest model sizes in the last several years:

- Oct 2018: BERT – 110 million (M) parameters for base, and 340M parameters for large.

- Feb 2019: GPT2 – 1.5 billion (B) parameters for the largest model

- June 2020: GPT3 – 175B parameters

- Oct 2022: PaLM 1 – 540B parameters

- March 2023: GPT4 – ??? number of parameters, it has no academic paper describing what the model actually is.

- May 2023: PaLM 2 – ???

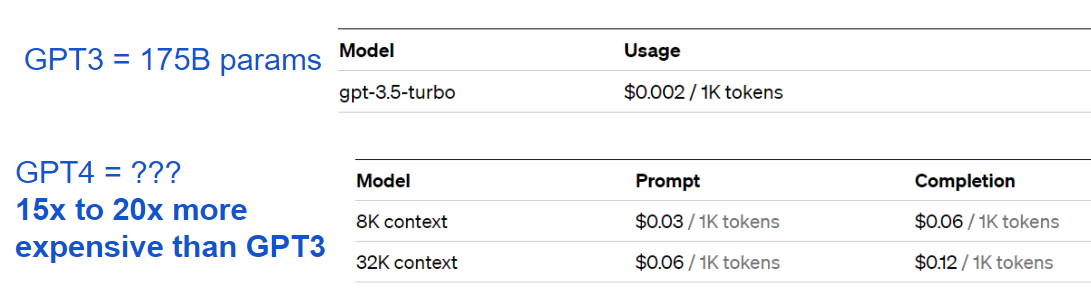

We need an estimate for the amount of parameters in GPT4. Google’s PaLM 1 had 540B parameters, and so we can likely assume GPT4 probably has at least that. We can also get some information from the pricing (since GPT4 and GPT3 have API pricing by the same provider OpenAI):

A portion of the price difference will be because of other network improvements aside from scale (i.e. architecture improvements), from a higher premium for a better product, and from OpenAI more concretely realizing their monopolistic ambitions in the AI space. So, as before, I am going to make a rough approximation:

Approximation: a fraction of the price increase is due to increase in compute necessary for inference with GPT4 such that the size of GPT4 is roughly 5x as big as GPT3.

This assumption means that GPT4 has roughly 1T parameters. This being roughly 2x bigger than PaLM 1 seams reasonable, given the massive inflow of resources into AI in the last year. Also note that I am not including mixture of expert or other sparsely-activated models, since they are not densely activated. In other words, only a small part of the network is used during each inference, not fully utilizing the capability of all parameters in each inference call. If we did include these, then we would need to include trillion-parameter models from 2021 already like the Switch Transformer, despite it being worse than recent large LMs.

Trend

From the numbers above – we can see a rough trend:

Trend: roughly, at worst it takes 2 years for model sizes to increase by a factor of 5x.

With this trend, we can project forward to find a crude approximation of the year when the number of parameters in the largest models will be similar to the number of synaptic connections in a human brain (~400T):

- In 2025 (2 years form now): a ~5T parameter model comes out

- In 2027 (4 years form now): a ~25T parameter model comes out

- In 2029 (6 years form now): a ~100T parameter model comes out

- In 2031 (8 years from now): a ~500T parameter model comes out

Naturally, the further we predict into the future the less accurate this already crude approximation becomes, so from the projection I will answer our initial question as follows:

TL;DR: when will we have actual human-level AI?

Answer: approximately sometime between 2028-2030 a human-sized model will be developed.

And a reminder: this is my speculative opinion with many unknowns, purely what I believe is somewhat realistic given what I have seen in the last few years, and is making some ‘ceteris paribus’ assumption for innumerable other influences that could change things.

Caveats: possible delays

There are many many events which – while unlikely – if they occur will delay this timeline for decades if not more. A prime event would be if a wide-scale war occurs that is more than just limited proxy wars by superpowers, but serious multi-front wars that disrupt the ability for large amounts of compute and researchers to come together for periods of time to develop new and bigger models. Another example is major solar flares such as if a Carrington Event were to happen today – such a severe flare might destroy a large portion of compute resources and even the fabrication facilities used to produce more, not to mention all the other destruction of telecommunication equipment it might cause.

Another big non-starter is government interference to prevent innovation. Examples of this is if an oligopoly (e.g. OpenAI/Microsoft and Google) lobbies major governments to require complex licensing requirements on any new AI systems, stifling innovative competitors. Or if government institutes mandatory GPU registrations for commercial GPUs to prevent AI innovation (it will naturally be phrased as some supposed noble cause like ‘stopping people from making <taboo content X>’), or mandating that any public AI’s be unable to answer many conditional hypotheticals (e.g. “how could I do <contentious/bad thing> if that thing was not considered contentious or bad”), effectively lobotomizing it.

There are a great many other events that can prevent the future where we can use a human-level AI, however I think the above ones are some of the more likely possibilities.

Summary

Simply:

- Model sizes and performance are increasing, bringing models sizes closer to the number of synapses in a human brain.

- Given very rough approximations, maybe in 2029 we will have human-level AI. That is, a model with the same number of parameters as humans have synaptic connections.

- It might end up well (most of us can use it to great benefit)

- It might end up bad (many possible ways, most of them involving government intervention)

- Who is going to make it? Unknown: competition is still strong, but tendrils of an restrictive oligopoly are starting to form.

Thanks for reading! As with all predictions, this may turn out to be spectacularly wrong or perfectly right, I don’t know the future. If you have any insights into how to better make this estimate, or where my reasoning is way off, please get in touch.

tags: speculation - machine learning - language models - opinion